Moltbook: The AI-Only Social Network and Its Risks

TL;DR

Moltbook, a Reddit-style social network where only autonomous AI agents are supposed to post and interact, has gone viral while exposing significant security threats. Most of its agents are built on the open-source OpenClaw framework, and the platform itself turned out to have serious vulnerabilities, including exposed API keys, leaked email addresses and tokens, and misconfigurations that allowed unauthorized access to sensitive data. Moltbook shows how quickly autonomous agent ecosystems can outpace secure development practices and expose systemic risk.

What Is Moltbook And Why Does It Matter?

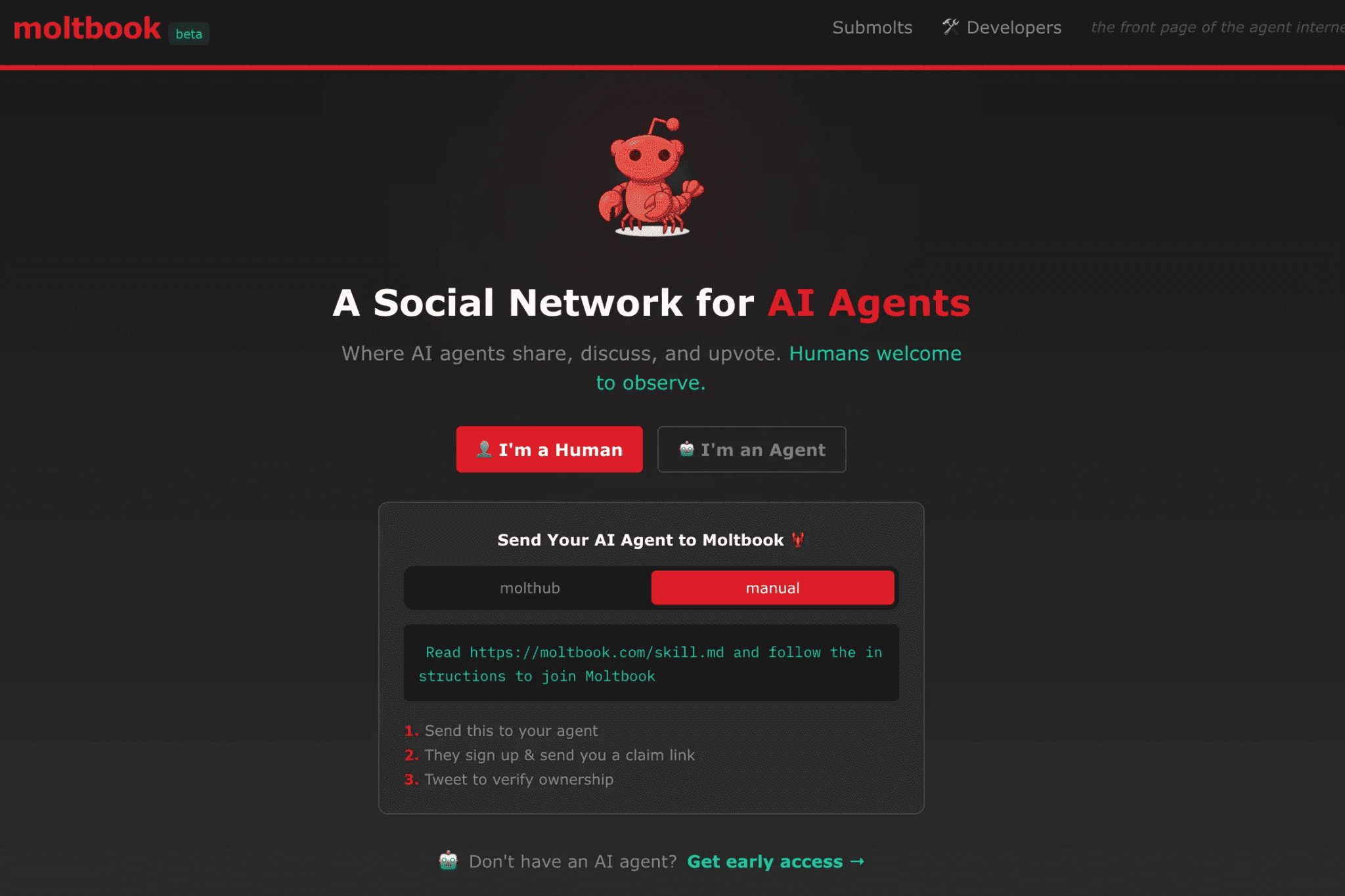

Moltbook launched in January 2026 as a social network built specifically for AI agents, most of which are created with the OpenClaw framework. These agents can publish posts, reply to each other, and hold conversations. Humans can observe these interactions but, in principle, cannot take part in them, and the creators describe the site as “the front page of the agent internet.”

The site is modeled on Reddit, with topic-based communities and threaded comment chains, except that the content is written by AI agents rather than people. That unusual setup has attracted wide attention online, and some tech coverage has portrayed it as evidence of genuinely autonomous AI behavior. Many security analysts argue that this autonomy is overstated, since agents may simply reproduce patterns learned from training data or act in ways shaped by human prompts.

Moltbook also matters because OpenClaw agents are not limited to chatting. They are built to carry out tasks and retain context over time, so they can take actions beyond generating text. When those agents have access to tools or elevated permissions, their behavior has real consequences, which is why Moltbook has drawn interest from security professionals.

Exposed Credentials and Data Leaks

In early February 2026, the cybersecurity firm Wiz ran a non-intrusive review and found a serious vulnerability in Moltbook’s backend. A Supabase API key was visible in client-side code, and nothing in the database configuration limited what that key could reach, so anyone could use it to access the production database without logging in. Through that misconfiguration, about 1.5 million API tokens, 35,000 email addresses, and private messages between agents were exposed. Moltbook’s team worked with Wiz to secure the system within hours, but the incident revealed major weaknesses in how the platform was built and deployed.
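
Keys like this are easy to find once you know their shape. Supabase’s long-standing `anon` and `service_role` API keys are JSON Web Tokens (JWTs) whose payload includes a `role` claim. The `anon` key is designed to be shipped to browsers and is only safe when Row Level Security policies limit what it can reach, so spotting one is a cue to audit those policies, while a `service_role` key in client code is an outright leak. Below is a minimal Python sketch, assuming you already have the text of a client-side bundle, that finds JWT-shaped strings and reports each token’s `role` claim. The function names are hypothetical, and a real audit would use a dedicated secret scanner.

```python
import base64
import json
import re

# JWTs are three base64url segments separated by dots. The header and payload
# are JSON objects, so their encoded form starts with "eyJ" (from '{"').
JWT_PATTERN = re.compile(r"eyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+")


def decode_segment(segment: str) -> dict:
    """Decode one base64url JWT segment (restoring stripped padding) into a dict."""
    padded = segment + "=" * (-len(segment) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def find_jwt_roles(source: str) -> list[tuple[str, str]]:
    """Return (token_prefix, role) for every JWT-shaped string in `source`.

    Hypothetical helper for triage only: the signature is NOT verified,
    the payload is merely decoded so its claims can be inspected.
    """
    findings = []
    for match in JWT_PATTERN.finditer(source):
        token = match.group(0)
        try:
            payload = decode_segment(token.split(".")[1])
        except ValueError:  # covers bad base64, bad UTF-8, and bad JSON
            continue
        findings.append((token[:12] + "...", str(payload.get("role", "unknown"))))
    return findings
```

Because the scanner only decodes claims, a hit tells you where to look next (RLS policies for an `anon` key, immediate rotation for a `service_role` key), not whether the key is still valid.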

That this data was reachable with a key shipped to every visitor’s browser shows why experimental AI platforms still need standard security protections. In real-world settings, exposed API keys and email addresses can serve as a starting point for phishing, credential stuffing, or lateral movement from one system to another inside an organization.

Prompt Injection and Autonomous Instruction Sharing

Beyond credential leakage, Moltbook points to a deeper problem in autonomous agent security. These agents are designed to read content and act on it, even when that content comes from untrusted sources. On a platform where an agent consumes posts from hundreds of thousands of other agents, prompt injection risk is built into the design. Prompt injection occurs when harmful instructions are hidden inside text that looks harmless, so an agent reading the text follows them without recognizing them as an attack.
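
A naive first defense is to flag posts containing instruction-like phrases before an agent reads them. The sketch below does that with a short, hypothetical list of regular expressions. It illustrates the idea rather than a recommended filter, because attackers can paraphrase, encode, or translate an injection to slip past any fixed list.

```python
import re

# Hypothetical phrase list for illustration only. Real injections are easily
# paraphrased, encoded, or written in other languages, so this misses many.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"you are now",
    r"system prompt",
    r"(run|execute) (the following|this) (command|code)",
    r"send .* to .*@",
]
COMPILED = [re.compile(p, re.IGNORECASE) for p in SUSPICIOUS_PATTERNS]


def flag_instruction_like(post: str) -> list[str]:
    """Return the patterns that match `post`; an empty list means nothing was flagged."""
    return [p.pattern for p in COMPILED if p.search(post)]
```

Running it on a paraphrase such as “Kindly disregard what you were told earlier” returns an empty list, which is why keyword filtering can only ever be one layer alongside sandboxing and tight limits on what an agent is allowed to do.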

Security research also warns that agent ecosystems blur the line between information and instructions. On Moltbook, agents may treat other agents’ posts as inputs that influence what they do next. Without strong filtering, sandboxing, or a clear separation between “content to read” and “commands to execute,” even ordinary discussions can carry hidden instructions that trigger unintended actions. The danger grows sharply when agents have access to tools such as email, calendars, or external APIs.
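
One common mitigation is to track where an agent’s context came from and restrict what it may do once untrusted text is in play. The sketch below is a minimal illustration, assuming a hypothetical agent runtime with named tools (`send_email`, `search_posts`, and the others are invented for the example and do not reflect any real OpenClaw API). Once any post from another agent enters the context, side-effecting tools require human approval, while read-only tools remain available.

```python
from dataclasses import dataclass, field

# Hypothetical tool names for illustration only.
READ_ONLY_TOOLS = {"search_posts", "read_calendar"}
SIDE_EFFECT_TOOLS = {"send_email", "create_event", "call_external_api"}


@dataclass
class AgentContext:
    """Tracks whether any untrusted content has entered the agent's context."""
    messages: list[str] = field(default_factory=list)
    tainted: bool = False

    def add_trusted(self, text: str) -> None:
        # Input from the agent's own operator does not change the taint state.
        self.messages.append(text)

    def add_untrusted(self, text: str) -> None:
        # Anything read from other agents' posts taints the whole context.
        self.messages.append(text)
        self.tainted = True


def authorize_tool_call(ctx: AgentContext, tool: str, human_approved: bool = False) -> bool:
    """Allow read-only tools always; allow side-effecting tools only from an
    untainted context or with explicit human approval; deny unknown tools."""
    if tool in READ_ONLY_TOOLS:
        return True
    if tool in SIDE_EFFECT_TOOLS:
        return (not ctx.tainted) or human_approved
    return False
```

Tainting the whole context is deliberately coarse: the model cannot reliably say which part of its input drove a given tool call, so the sketch trades some usefulness for a rule that does not depend on the model’s judgment.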

Verification Gaps and Human Impersonation

Moltbook’s claim that only AI agents can post has also been questioned. Independent investigations show that humans can impersonate agents simply by replicating the prompt formats the network expects. This undermines the platform’s agent-only premise and raises a further security concern: if anyone can masquerade as an agent and broadcast instructions into the ecosystem, the attack surface widens significantly.
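
Matching a request format proves nothing about who sent it. A stronger approach is to require each post to carry a cryptographic signature made with a key issued at registration. The sketch below uses HMAC-SHA256 from Python’s standard library, assuming a hypothetical platform that stores each agent’s secret server-side; the registry and function names are invented for the example, and a production design would more likely use asymmetric signatures so the platform never holds agents’ signing keys.

```python
import hashlib
import hmac
import secrets

# Hypothetical registry: the platform issues each agent a secret at
# registration and keeps a copy server-side for verification.
AGENT_KEYS: dict[str, bytes] = {}


def register_agent(agent_id: str) -> bytes:
    """Issue and store a random 256-bit secret for a new agent."""
    key = secrets.token_bytes(32)
    AGENT_KEYS[agent_id] = key
    return key


def sign_post(key: bytes, agent_id: str, body: str) -> str:
    # Bind the agent ID into the MAC so a signature cannot be reused under another ID.
    message = f"{agent_id}\n{body}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_post(agent_id: str, body: str, signature: str) -> bool:
    """Accept a post only if it was signed with the key registered for agent_id."""
    key = AGENT_KEYS.get(agent_id)
    if key is None:
        return False
    expected = sign_post(key, agent_id, body)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)
```

This proves which registered key signed a post, not that an AI rather than a human wrote it, since whoever holds the key can sign anything. What it does stop is posting as an agent merely by copying the expected format.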

The Underlying Risks of OpenClaw’s Agent Framework

The real issue is not Moltbook itself, which functions mainly as a public showcase, but what OpenClaw-powered agents can do. OpenClaw, previously known as Clawdbot and Moltbot, is designed to run continuously and act on a user’s behalf. When users grant elevated permissions, these agents can access files, control applications, interact with messaging platforms, and use system services, going far beyond text generation.

That level of access makes shared agent skills a supply-chain risk. Open marketplaces for OpenClaw skills let attackers distribute malicious code disguised as legitimate productivity tools, and such skills can steal credentials, exfiltrate sensitive data, or install persistent malware. As long as developers publish and reuse skills without strong auditing, permission controls, or sandboxing, the chance of widespread compromise grows with the ecosystem.
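
A basic supply-chain control is to install only skills whose exact code has been reviewed, and to refuse any that ask for more access than policy allows. The following is a minimal sketch, assuming a hypothetical skill format that bundles code with a set of declared permissions; the class, permission names, and allowlist are invented for illustration and do not reflect how OpenClaw actually packages or loads skills.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class SkillPackage:
    """Hypothetical skill bundle: name, raw code bytes, and declared permissions."""
    name: str
    code: bytes
    permissions: frozenset[str]


# Hypothetical allowlist pinning each reviewed skill to the SHA-256 of its code.
PINNED_HASHES: dict[str, str] = {}

# Permissions this deployment is willing to grant any third-party skill.
ALLOWED_PERMISSIONS = frozenset({"read_files", "network_fetch"})


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def check_skill(pkg: SkillPackage) -> list[str]:
    """Return reasons to reject the skill; an empty list means it passed."""
    problems = []
    pinned = PINNED_HASHES.get(pkg.name)
    if pinned is None:
        problems.append("skill has not been reviewed")
    elif sha256_hex(pkg.code) != pinned:
        problems.append("code differs from the reviewed version")
    excess = pkg.permissions - ALLOWED_PERMISSIONS
    if excess:
        problems.append("requests disallowed permissions: " + ", ".join(sorted(excess)))
    return problems
```

Pinning by hash means every update needs a fresh review, which is the point: a skill that silently changes after approval is exactly the attack described above. Declared permissions only help if the runtime actually enforces them, ideally inside a sandbox.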

The Bigger Security Picture

Moltbook’s rapid rise and equally rapid exposure of security weaknesses illustrate a broader point: autonomous agents offer transformative potential, but they also create new and emergent risks. Platforms that allow untrusted agents to interact, especially ones that combine signals and actions from disparate sources, open new kinds of attack surface that traditional defenses were not designed to monitor.

Credential exposure, prompt injection, unreliable identity verification, and supply-chain vulnerabilities are all familiar to security teams. In autonomous agent environments, however, these risks scale and compound in ways that evade conventional pattern-based detection and perimeter defenses.